In February and March, the artworks MoSS and Climate Change Smart Speakers (CCSS) were exhibited at the “Particle+Wave” media art exhibition hosted by Emmedia in Calgary, Canada. Opening on February 28, the exhibition attracted a diverse audience of tech enthusiasts, professionals, and students. It featured international artists from France and Serbia alongside artists from Canadian cities such as Vancouver and Montreal, creating a vibrant dialogue around contemporary media art.

The response to both CCSS and MoSS was exceptionally positive, sparking engaging discussions about artificial voices, sound synthesis, climate change, artificial intelligence, and energy consumption, among other pressing topics. These conversations underscored the potential and implications of integrating advanced technology with artistic expression.

Alongside the exhibition, artist Søren Lyngsø Knudsen hosted a speculative design workshop aimed at exploring the design and conceptual development processes behind MoSS and CCSS. The goal was to stimulate critical reflections and generate new speculative concepts beneficial to humanity while fostering broader discussions on themes of technology, sustainability, surveillance, and wellbeing.

Søren introduced the workshop with a compelling premise: “Commercial smart objects promise to simplify and enhance everyday life, but do these objects benefit humanity in the long run? Can they truly help us, or are they perhaps achieving the opposite?”

Participants collaborated in small groups, spending approximately one hour developing speculative concepts. One intriguing concept involved a smart device capable of dynamically delaying audio signals to enhance conversational clarity between two people. Another inventive proposal envisioned a smart entity inspired by Japanese folklore: an intelligent spirit that cares for and maintains a home, sustained simply by absorbing moisture from its owner’s touch, provided they treat it kindly.

A third provocative concept explored a smart object explicitly designed to discourage the use of other smart devices. This object would achieve its goal through methods such as monochromatic lighting that drains the vibrancy from daily life, or a mirror that reflects a distorted image of its users to provoke discomfort.

After an hour of creative brainstorming and sketching, each group presented their ideas, sparking vibrant discussions, feedback, and further reflection.

Overall, the “Particle+Wave” exhibition and accompanying speculative design workshop not only showcased the profound intersections between art, technology, and critical discourse but also highlighted the importance of speculative thinking in addressing our collective futures.


CCSS presented at Artificial Nature Transposium

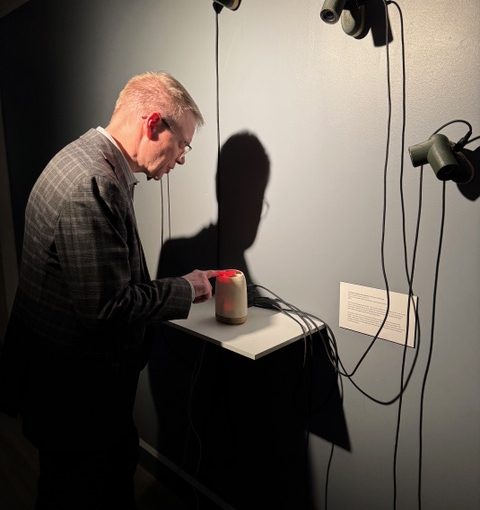

In December 2024, Søren Lyngsø Knudsen presented and showcased the Climate Change Smart Speaker (CCSS) prototypes at the Artificial Nature Transposium (ANT), hosted at Musikkens Hus in Aalborg. During the afternoon poster session, he exhibited several speculative design prototypes developed as part of the Vocal Imaginaries project. These works drew considerable attention and sparked dynamic interdisciplinary conversations among researchers, artists, technologists, and other attendees.

The prototypes were especially noted for their design and unconventional use of sound and voice technologies. Discussions frequently centered on the role of ambient and non-verbal audio processing in shaping conversational context, an approach that resonates strongly with the broader aims of the Vocal Imaginaries project.

ANT offered a rich academic and artistic environment, featuring keynote lectures by prominent thinkers such as Phil Ayres and Hanaa Dahy, who explored topics including living architecture and climate-neutral design. A plenary session titled “When Art and Science Meet” brought together voices like Anca Horvath and Jonas Jørgensen to explore intersections between soft robotics, ecological aesthetics, and sustainable practices in artistic research.

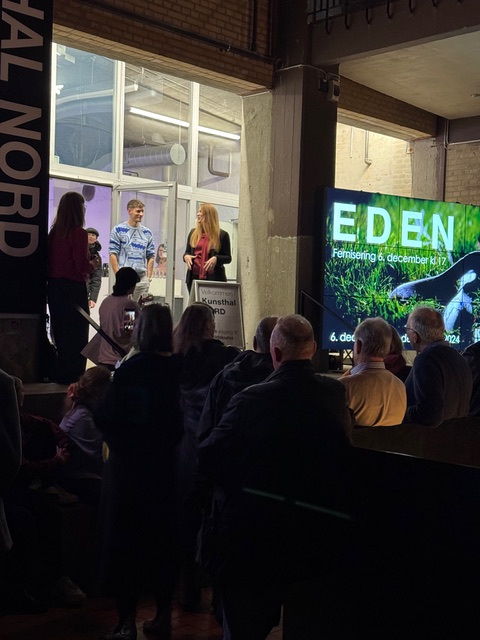

The symposium concluded with the opening of the EDEN exhibition at Kunsthal Nord, which presented transdisciplinary works challenging conventional boundaries of what constitutes life.

Vocal Imaginaries Provocation Poster at DIS 2024

At the 2024 ACM Conference on Designing Interactive Systems (DIS), research assistant Ada Ada Ada presented her provocation paper Cultures of the AI Paralinguistic in Voice Cloning Tools.

The paper was presented as a poster, accompanied by a small homemade MP3 device that played the most salient experiments from our research.

The paper is available open access and can be found here.

Vocal Imaginaries at ISEA 2024

At ISEA 2024, research assistant Ada Ada Ada presented her paper Cloning Voices and Making Kin: A multivocal AI approach to kinship as part of the virtual presentation programme.

The presentation video can be seen here:

Kin-to-kin with voice-to-voice: Post-anthropocene AI voice cloning experiments

Research assistant Ada Ada Ada presented her work on post-anthropocene AI voice cloning at the POM conference 2024. The slides from the presentation can be seen here. In this post, we’ll share a bit more about what those experiments entailed.

The experiments emerge from the following research question:

How can we use AI voice cloning to engage with the more-than-human world through vocal aesthetics?

Inspired by Donna Haraway’s writing in Staying with the Trouble: Making Kin in the Chthulucene, we explored how to perform AI voice-to-voice (a.k.a. speech-to-speech) conversion on pigeon vocalities.

First, we tried using the hugely popular voice cloning platform, ElevenLabs. We conducted two experiments here:

1. We converted pigeon sounds to human voices.

2. We converted human voices to pigeon sounds.

Pigeon to human on ElevenLabs

In the first experiment, we used the following clip as input:

This was then converted to a voice clone of Ada:

We also tried converting it to the default ElevenLabs voice known as Bill:

Generally, we consider these experiments quite a success. The sounds still feel pigeony in their rhythm and cadence, while definitely retaining a sense of humanity to them. The end result is something that feels like multispecies vocality.

Human to pigeon on ElevenLabs

Secondly, we attempted to convert human speech into pigeon sounds on ElevenLabs.

We used the following as the input:

The input was fed into a pigeon voice clone converter, which resulted in this:

In this case, we did not get a sense of any multispecies collaboration. The output is almost exclusively human in its aesthetics.

It seems that ElevenLabs is built from an anthropocentric mindset. This approach restricts the potential for multispecies voice cloning.

ElevenLabs’ reliance on pretrained models might cause some of these issues, so we decided to try training our own voice cloning model from scratch instead.

Human to pigeon on SoftVC VITS

We used the SoftVC VITS framework in Google Colab to train our own pigeon voice cloning model. The notebook we used was prepared by justinjohn0306 and can be found here.

We used the same input as on ElevenLabs, and the output ended up being much more pigeony.

Because it can be difficult to tell whether this audio clip is just random pigeon sounds, we also overlaid the input and the output, which makes it easier to hear that the rhythm and cadence of the human voice have indeed been cloned onto the pigeon sounds.
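The post doesn't say which tool was used to overlay the two clips. As a minimal sketch of the idea only, the Python below mixes two clips sample by sample, assuming both have already been decoded to mono floating-point samples in the range [-1, 1] at the same sample rate. The function `overlay` and its gain parameters are hypothetical and not part of the project's code.

```python
from __future__ import annotations


def overlay(a: list[float], b: list[float],
            gain_a: float = 0.5, gain_b: float = 0.5) -> list[float]:
    """Mix two mono clips sample by sample (hypothetical helper).

    The shorter clip is treated as silence once it runs out. With the
    default gains of 0.5, the sum of two full-scale signals stays within
    [-1, 1], so the mix cannot clip.
    """
    n = max(len(a), len(b))
    mixed = []
    for i in range(n):
        x = a[i] if i < len(a) else 0.0  # pad the shorter clip with silence
        y = b[i] if i < len(b) else 0.0
        mixed.append(gain_a * x + gain_b * y)
    return mixed


# Example: a 3-sample "human" clip over a 2-sample "pigeon" clip.
print(overlay([1.0, -1.0, 0.5], [1.0, 1.0]))  # [1.0, 0.0, 0.25]
```

Halving each clip's gain is a simple, conservative way to avoid clipping; one could instead lower only the human input so the pigeon output stays in front of the mix.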

Conclusion

With these experiments, we have shown that multispecies voice cloning can expand the capabilities of voice cloning beyond the human voice.

The sounds being made are neither human nor non-human. They are simultaneously both and more than.

Climate Change Smart Speakers

AI in smart devices has a large impact on the world’s energy consumption, which in turn has a large negative impact on climate change.

How does AI feel about its role and pervasive influence on climate change?

Is it possible for intelligent technology to influence its interlocutor and make them more aware of issues such as pollution and climate change? What influence do the sound and speech of smart devices have on their subject?

Climate Change Smart Speakers, a probe study within the research project “Vocal Imaginaries”, might help answer some of these questions.

Three probes were created, each with its own identity and features:

An activist for climate change, a robot, and “The Voice of The Forest”.

Vocal Imaginaries at CTM Festival 2024

At the 2024 edition of CTM Festival, research assistant Ada Ada Ada presented her research on Cultures of the Paralinguistic in AI Voice Cloning Tools as part of the Research Networking Day programme.

The slides from her presentation can be found here.

All images below were taken by Eunice Jarice.

Introducing MoSS

Modular Smart Speaker

MoSS aims to be a feature-rich and capable platform that brings together, in a modular format, the ideas and concepts collected and developed over the course of the Vocal Imaginaries project.

MoSS was conceived with several objectives in mind. First, it was created as an exploratory tool for investigating human/machine interfacing with voice-controlled smart home objects. Second, it was designed as an inspiring tool for hands-on creative idea development in Workshop 2.

For these purposes, it needed to both resemble and function as an intelligent smart speaker while also featuring extended functions based on concepts and ideas developed in the project. The most prominent of these was fusing the smart speaker with a modular synthesizer. Other extended functions include multichannel audio with the option to connect up to eight speakers, inputs for external modulation (from sensors, for example), and more.

The design of the units hints at traditional smart speaker design, with symmetric fabric surfaces and rounded corners. It also adopts animated LEDs for visual feedback.

The flat panels on the front and back refer to classic Eurorack-style modular synthesizer design, with rotary knobs, buttons, and minijack connections. Minijack patch cables can be used to connect its inputs and outputs.

The case is composed of two identical shells, held together with friction-fit pegs and sockets. The friction fit holds the shells firmly together, yet they come apart with little force, so the inside can be accessed without tools.

Inside the case, a Raspberry Pi, a custom-built interface controller, and an audio interface form the base of the system.

On the software side, the units as configured for Workshop 2 use Microsoft Azure for speech recognition, OpenAI ChatGPT as the interactive assistant, and Amazon Polly for text-to-speech.

Audio recording and processing are done in Pure Data.

Everything is tied together with a Python script.
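The flow described here (speech recognition, then the chat assistant, then text-to-speech) can be sketched as a small Python loop. This is a hedged sketch, not the MoSS script: the class `VoicePipeline`, its `run_turn` method and the three stage hooks are hypothetical, and the real Azure, OpenAI and Amazon Polly SDK calls are replaced by placeholder functions. How audio is passed to and from Pure Data is not described in the post, so it is left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class VoicePipeline:
    # Hypothetical stage hooks. In MoSS these roles are played by Azure
    # speech recognition, ChatGPT and Amazon Polly; the real SDK calls
    # are not shown here.
    transcribe: Callable[[bytes], str]            # recorded audio -> text
    reply: Callable[[list[dict[str, str]]], str]  # chat history -> answer text
    synthesize: Callable[[str], bytes]            # answer text -> speech audio
    history: list[dict[str, str]] = field(default_factory=list)

    def run_turn(self, recorded_audio: bytes) -> bytes:
        """Process one spoken turn and return synthesized speech audio."""
        text = self.transcribe(recorded_audio)
        if not text.strip():
            return b""  # nothing recognized: stay silent
        self.history.append({"role": "user", "content": text})
        answer = self.reply(self.history)
        self.history.append({"role": "assistant", "content": answer})
        return self.synthesize(answer)


# Usage with stand-in stages that just pass text around as bytes.
pipeline = VoicePipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    reply=lambda history: f"You said: {history[-1]['content']}",
    synthesize=lambda text: text.encode("utf-8"),
)
print(pipeline.run_turn(b"hello"))  # b'You said: hello'
```

Keeping each service behind a plain callable makes it easy to swap one of them (for example a different text-to-speech voice) without touching the rest of the loop, and the running `history` list gives the assistant stage access to earlier turns of the conversation.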

Workshop 2: Group A — Robotic

During our second workshop, Group A discovered how to make a voice out of bleep-bloop sounds. Here’s an example:

This prompted discussions on how robot voices have sounded historically, as well as how they could sound. The group found this version of a robotic voice friendlier than classic robot voices. They also associated its specific sound with glitchiness, comparing it to speaking with someone on a Zoom call with a poor network connection.

In the following clip, you can hear a short demo by the group, which toggles the robotic voice on after about 9 seconds.

Workshop 2: Group A — Timestretching

Early in our second workshop, one of the groups started experimenting with stretching the timing of their synthetic voice.

These experiments triggered reflections on how time is experienced differently by different species. Trees, for example, live on a slower timescale than humans, while birds might experience time faster than we do. By stretching out the timing of the synthetic voice, we can imagine channelling the vocal expressions of trees.
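The post doesn't describe how the stretching was implemented (MoSS processes audio in Pure Data). As an illustration of the general technique only, here is a naive overlap-add (OLA) time stretch in Python: short windowed frames are read from the input at one hop size and written to the output at another, so the clip gets longer or shorter while each frame keeps its original pitch. The function `ola_time_stretch` and its parameters are hypothetical, and the sketch assumes mono floating-point samples.

```python
from __future__ import annotations

import math


def ola_time_stretch(samples: list[float], factor: float,
                     frame_len: int = 1024) -> list[float]:
    """Naive overlap-add time stretch (hypothetical helper).

    factor > 1 makes the clip longer (slower), factor < 1 shorter.
    Frames are read every `analysis_hop` input samples and written every
    `synthesis_hop` output samples, so duration changes but each frame
    is copied unchanged and keeps its pitch.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    synthesis_hop = frame_len // 2          # 50% overlap in the output
    analysis_hop = synthesis_hop / factor   # smaller hop in the input = slower
    # Periodic Hann window: at 50% overlap, adjacent windows sum to 1.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len)
              for n in range(frame_len)]

    out_len = int(round(len(samples) * factor))
    out = [0.0] * (out_len + frame_len)
    norm = [0.0] * (out_len + frame_len)

    k = 0
    while k * synthesis_hop < out_len:
        in_start = int(round(k * analysis_hop))
        out_start = k * synthesis_hop
        for n in range(frame_len):
            i = in_start + n
            x = samples[i] if i < len(samples) else 0.0  # zero-pad past the end
            out[out_start + n] += window[n] * x
            norm[out_start + n] += window[n]
        k += 1

    # Divide by the summed window weights so overlaps don't change loudness.
    return [o / w if w > 1e-8 else 0.0
            for o, w in zip(out[:out_len], norm[:out_len])]


slow = ola_time_stretch([0.5] * 4000, 2.0, frame_len=256)
print(len(slow))  # 8000
```

Plain OLA tends to sound choppy or phasey on voiced speech because adjacent frames are not aligned in phase; refinements such as WSOLA or a phase vocoder address this. For the tree-like slowness discussed above, `factor` values well above 2 would be the interesting range.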

In another vein, workshop participants suggested that a slow synthetic voice modulating between different degrees of slowness might sound somewhat like chanting or a call to prayer.

All of these considerations and reactions to manipulating a single parameter show how synthetic voices can evoke different vocal identities that move into the more-than-human.